2025 - Learnings & Thoughts

I am a bit late to this category of posts, mostly because I had no real idea how to approach it. Usually, I would write an industry look-back with predictions, but honestly, everything is in such a state of flux that it did not feel right.

For myself, I always do a year recap and plan the next year, but that contains a lot of personal stuff. This year felt transformational, though, so I decided to write a public personal version.

I usually don't write that kind of stuff, so be nice to me, and maybe you'll find something interesting.

AI/agentic transformation of work

The capability leap

Let me start with a concrete example. In December 2024, I created a game for my daughter to reveal her Christmas present. She had to play through an Asteroids clone to see what she was getting. Fun idea, worked as intended.

I built it using one of the early coding agents. Replit's automatic coding. And it was impressive. Half a year earlier, similar tools produced results that weren't really promising. This felt like a glimpse into what's possible.

But let's be honest. It was buggy. Getting those bugs out would have taken a long time. The game was good enough for a one-time Christmas reveal, not much more.

Now I look at what I'm doing with coding agents in December 2025, and it's an entirely different world. The progress in this space has been so significant that it defines a new chapter for how I work.

I know some people will flinch reading this. There's so much stupid stuff written about AI. So much ridiculous advice that you shouldn't follow. I get it. Take my perspective with a grain of salt as well. You have to find your own angle.

It's not a super weapon. But the capabilities have increased substantially in 2025, and my adoption of them has grown along with them. When I compare how I work now to January 2025, about 80% has changed. This was a transition year in which I began relearning a different way of working.

I'm curious to see if 2026 brings more fundamental shifts or if it's mostly iteration on what happened this year.

Everything is a tool (the fundamental reframe)

This might sound obvious. But it was such a strong reinforcement that it changed how I approach things fundamentally.

Everything is a tool. And tools come with specific requirements.

The first requirement: you have to learn to use the tool. This was the essential thing with everything I did with AI this year. It was a complete relearning. Not incremental improvement. Learning a new tool from scratch. Understanding how to actually use it.

This relearning took much longer than I would have predicted.

I had to force myself into it. I did this by taking something I can do pretty well: building a data stack with data infrastructure, something I've done enough times to know how it should look. Then I forced myself to implement it purely in agentic mode with Claude Code. No manual intervention allowed. No "let me check the code and adapt something." No "this is actually not right, let me fix that." Everything had to go through the agent.

The first two implementations were painful. Each took three times as long as doing it myself, or even as working with AI in a copilot style. I rebuilt things so many times. It screwed up so many parts. At some point, it was really painful to watch.

But that was the learning process.

Along the way, I learned what kind of context to provide and how. How much to break down the things I ask for. What the process should look like when working on a specific kind of feature.

You might remember the early criticism. People saying "I asked Claude to do XYZ and it totally sucked, so the whole thing sucks." Well, yes. You buy a new tool, you don't know how to use it, you try to drill a hole. Usually doesn't end well. Same here.

The second part of the tool framing: not every tool works for every job. Not every tool is the best for a specific job. This sounds obvious too. But it matters when you're figuring out where AI fits and where it doesn't.

The real productivity gain isn't "4x faster"

When I figured out how to build a data stack mostly agentically, it didn't suddenly make me four times more productive. That's not what happened.

What it gave me was the possibility to go broad. To question things I wouldn't have questioned before because of resource constraints.

Here's the reality of classic consulting work. I have an agreed budget. The budget is based on my experience. So the project runs in a tight environment that doesn't allow me to say "let's see if I can do this model differently."

This was always painful for me. I love iterations. Build something, look at it, play around with it, run it in production, see some flaws, and then say "actually, this doesn't do the job well enough, why don't we change this part completely?"

Not possible in a classic data setup project. It would require telling clients they have to pay three times the price because they're not just getting my first implementation. They're also getting my iterations to make it better. Hard sell. "I know really well how to build it, but every setup needs some tweaks, so maybe you have to pay up." Not impossible to sell, but definitely harder.

Now with agentic implementation, I can actually do this. Same budget as before. But I can offer the same setup with more iterations on my end.

For one project this year, I didn't have a blueprint. I had some ideas from similar projects and knew I wouldn't implement it the same way, because I'd seen the flaws. I implemented my first idea. Then I iterated. The project went through two significant implementation shifts along the way. All within the budget I agreed on.

Because I could go broad. I could question things.

I could question parts of my data model from a tool perspective. "Do we actually need this layer? Does this model approach actually do the job I want it to do?" Turns out, a lot of times, no. It doesn't do the job well enough. So I refine it.

And it made me think harder about the job each part actually does. What is the job of a staging model?

Before, someone would ask "why do we have a staging model?" and I'd say "it's a common approach, best practice." Now I can say "let's run an experiment without it and see how it works."

This is the real gain. Everything becomes a flexible tool. Question its role. See if it's still the right use case. Or don't use it at all.

Building is great again

I come from product. My first and true love is still product. Data is the second, but it could never match the feeling I have when I actually work on products.

A lot of things drove me away from product work. That's why I ended up in data and analytics. But here's the interesting thing: coding agents are taking away some of the parts that pushed me out.

In classic product setups, you have a very narrow window for solutions. Lean development, agile iterations, all that stuff is true in theory. In practice, it rarely happens. There's too much organizational pressure not to iterate. Investments in features have to pay off before you start working on them again.

It's super hard to actually take time to iterate until you have something really good. And I knew I couldn't be a person who finds something that works well for users in just two or three iterations. Almost no one can.

That always drove me crazy.

Now with coding agents, this changes. I can go in very different directions. I can experiment with approaches, not just ship and move on.

One thing I built this year, just for internal use so far: a different data stack. Not using dbt. Pydantic models under the hood. Everything is typed. It still has some layered data modeling, but it's more optional. We only do staging if we need staging. It looks more like function steps than "another transformation."

The only thing that came fixed was my paradigm, what I wanted to achieve. Then I tested different ways to implement that paradigm. Eight weeks of playing around with different approaches. Huge fun.

What I actually built: you define entities with activities in a Pydantic model. The model has primitives for how it maps source data to these entities. Then I created what Pete nicely called a compiler. I wouldn't call it that, because it's a pretty poor compiler. But it compiles the Pydantic models into Ibis code. That Ibis code can then run against any of the source or destination systems Ibis supports. Very flexible.

Two things make this great. First, it gives me a much more resilient data model to work with. Super easy to test. Second, agents love types. Everything is typed, so agents work really well with it.
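To make the entity/activity idea and the "compiler" step a bit more concrete, here is a toy sketch. It is not the implementation described above, and every name in it (`Entity`, `Activity`, `SourceMapping`, `compile_entity`, `compile_activity_stream`, plus all table and column names) is hypothetical. To keep it dependency-free, it swaps in standard-library dataclasses for Pydantic models and emits plain SQL strings instead of Ibis expressions. The point is only the shape: typed definitions in, queries out.

```python
from dataclasses import dataclass, field

# Hypothetical primitives for illustration only. The stack described in the
# post uses Pydantic models and compiles to Ibis; this sketch uses dataclasses
# and emits SQL strings to stay dependency-free.


@dataclass(frozen=True)
class SourceMapping:
    """Where an entity's rows come from and how source columns map to attributes."""
    table: str
    id_column: str
    columns: dict[str, str] = field(default_factory=dict)  # attribute -> source column


@dataclass(frozen=True)
class Activity:
    """Something an entity does, read from an event-like source table."""
    name: str
    source_table: str
    entity_id_column: str
    timestamp_column: str


@dataclass(frozen=True)
class Entity:
    name: str
    mapping: SourceMapping
    activities: tuple[Activity, ...] = ()


def compile_entity(entity: Entity) -> str:
    """Toy 'compiler': turn an entity definition into one SELECT statement."""
    m = entity.mapping
    items = [f"{m.id_column} AS {entity.name}_id"]
    items += [f"{src} AS {attr}" for attr, src in m.columns.items()]
    return f"SELECT {', '.join(items)} FROM {m.table}"


def compile_activity(entity: Entity, activity: Activity) -> str:
    """Normalize one activity source into (entity id, activity name, timestamp)."""
    return (
        f"SELECT {activity.entity_id_column} AS {entity.name}_id, "
        f"'{activity.name}' AS activity, "
        f"{activity.timestamp_column} AS occurred_at "
        f"FROM {activity.source_table}"
    )


def compile_activity_stream(entity: Entity) -> str:
    """Union all activities of an entity into a single activity stream."""
    return "\nUNION ALL\n".join(compile_activity(entity, a) for a in entity.activities)


# Example definition (hypothetical tables and columns).
account = Entity(
    name="account",
    mapping=SourceMapping(
        table="crm_accounts", id_column="acc_id", columns={"plan": "plan_name"}
    ),
    activities=(
        Activity("signed_up", "app_signups", "acc_id", "created_at"),
        Activity("upgraded", "billing_events", "account_ref", "event_time"),
    ),
)

if __name__ == "__main__":
    print(compile_entity(account))
    print(compile_activity_stream(account))
```

Because the definitions are typed, an agent (or a reviewer) can see at a glance what a valid entity looks like, and a test only needs to compare the compiled output for a small fixture. A real version would presumably build Ibis table expressions in the compile step, so the same definitions could run on different backends.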

This brought my personal joy back.

I'm working on something I'll publish very soon. A product I always wanted to exist. Too niche for anyone else to build. But not too niche to be sustainable. So I'm building it myself.

Alongside it, I'm building some internal tools. A Mac application that's basically a crossover of Claude Code and n8n. Right now it generates my blog images and YouTube thumbnails and helps me save interesting stuff I find on the internet. It's already becoming a useful workbench.

The point is: I have fun building again. I now have the tools to build products how I always wanted to build them.

I suck at building products together with other people. I can make compromises in other areas of my life, but when I build stuff, I'm very bad at making compromises. Now I have a setup that doesn't require me to.

I talk to other people who are doing similar things. This isn't an isolated case. It's a massive shift. I'm curious what comes out of this in the next few years.

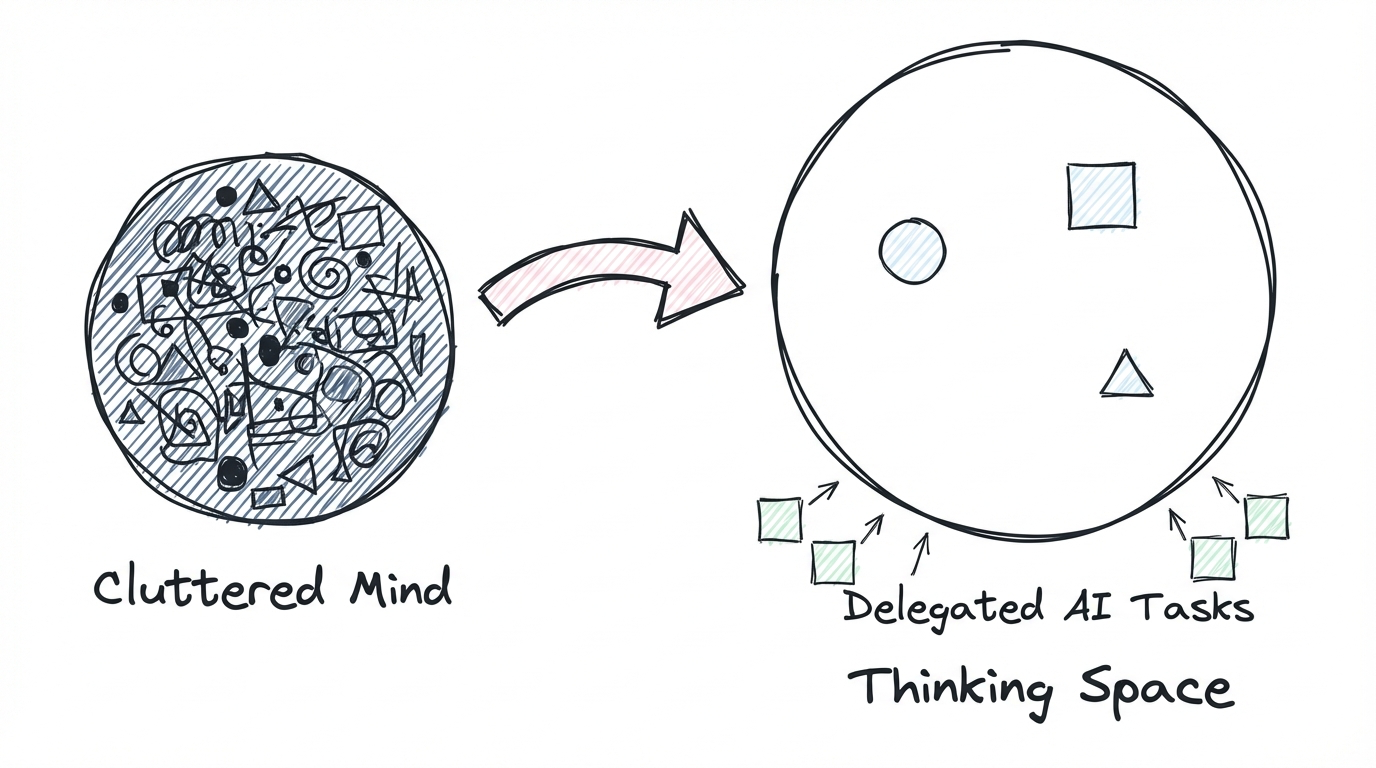

The brain adapts (but needs different things)

This one is more personal. Maybe I'm just seeing it wrong, some weird self-awareness issue. But I noticed something when I started experimenting with agentic workflows.

I was working on four different projects at the same time. Not doing the manual implementation myself. Giving feedback on results, refining context, improving prompts, and giving direction for the next steps.

I got exhausted much faster. My energy to work ran out after an hour and a half. That felt really weird. Usually I can go much longer and work deeply for extended stretches.

My brain wasn't used to this. I was doing stuff I wasn't used to doing.

Maybe the reason is that I always avoided delegation work. I was never good at it. For someone in a classic manager role who delegates a lot, this type of work might feel natural. For me, it didn't.

But it turned out to be just an adjustment period. After pushing through and sticking with the same way of working, my brain started to adapt. Now I can work this way much more easily.

Still, my way of working is changing significantly.

I have to take different breaks. I have to free up a lot more space to think about things.

Before, I had a setup of constant information inflow: social media, news, and other stuff. Lots of information coming in while I still tried to find something new in it. That doesn't work for me anymore. People who made this change earlier would say "of course." For me, it needed this specific push to get there.

I need more space where I just think about things.

My content approach is shifting too. Before, when I wrote a blog post or created a video, it was very hands-on: "Here's how you actually solve something." I'm still doing that. But now I can feel that a different loop has much more value for me: thinking about a topic, distilling it down, writing it out or creating a video, presenting it to people, getting feedback, incorporating that feedback, and doing another iteration.

I'm also starting to read more. I hadn't done that for five or six years. I was an avid reader before, but I never felt I had the space for it. I couldn't slow down.

Why is this possible now? AI can do the implementation work that I usually had to speed up to get done. I can pass it on. "You do the implementation, I'll check the results, we'll see if it works out." That gives me the space to step back and think more.

I'm not a deep thinker. I'm more a variation thinker. I love going into different variations of things. This setup gives me the possibility to do that again.

Creating things (book and courses)

Writing and publishing a book

I started my book in 2023. Wrote it throughout 2024. Finally published it in 2025.

You can get the PDF here, and I am now also releasing it as a free web version, module by module: https://timodechau.com/books/analytics-workbook/

One decision was essential to make it happen: pre-publishing. I made the book available when I had the first 100 pages. People could buy it early, get updates as I wrote more.

This was essential to actually finish. Without that pressure, I would have never completed it. I would have started, reached a point where I thought "maybe this isn't the right thing to do," put it aside. Maybe pulled it out again later. But I'm not sure I would have actually brought it out.

But I won't do it again.

Two major downsides.

First, awful user experience. People get a version they know isn't finished. They get updates after two weeks, maybe four weeks. I had planned a strict two-week rhythm. That turned out to be impossible. The time periods became irregular, which destroyed the experience.

People make notes in the PDF. New version comes out, they can't transfer their notes. Others missed updates entirely. "You wrote about this?" "Yes, one of the latest updates." "Sorry, didn't see it."

Second, it takes away the possibility to refine. I planned the book too big. The initial idea was too ambitious for one book. It ended up at almost 600 pages. One page text, one page illustration, so it doesn't feel like 600 pages. But still massive. It covers four different sections that each could have been their own book.

The book became a fundamental book even though I didn't want to write a fundamental book. If I hadn't pre-published, I would have made different decisions along the way. Probably split it into four books: theory, design, implementation, governance. That would have worked better.

But that's part of the process. I already have a clear idea for the next book and know how to do it differently.

Self-publishing is still my model. Having "O'Reilly author" in your bio has lost some value. Lots of people have it now. It's not worth compromising on how I want to write. I build my products on my own. I'm not good at building together with others.

The book has sold over 300 copies. Not massive, but solid. I got really nice feedback from people who learned essential things from it. Someone consumed the whole book through NotebookLM, just chatting with the content. That gave me the idea to create tracking plans with the book. It worked, but didn't trigger a rush of sales.

One thing is clear: a book gives you more authority than any other format. I do YouTube videos, blog posts, LinkedIn posts, had a podcast. They all give you a different kind of authority. But a book gives you "he's the one for this" positioning. Nothing else does that.

If you're thinking about writing a book, I highly recommend it. Don't overthink it. Find a very narrow angle so you can keep it short. Then go on the journey.

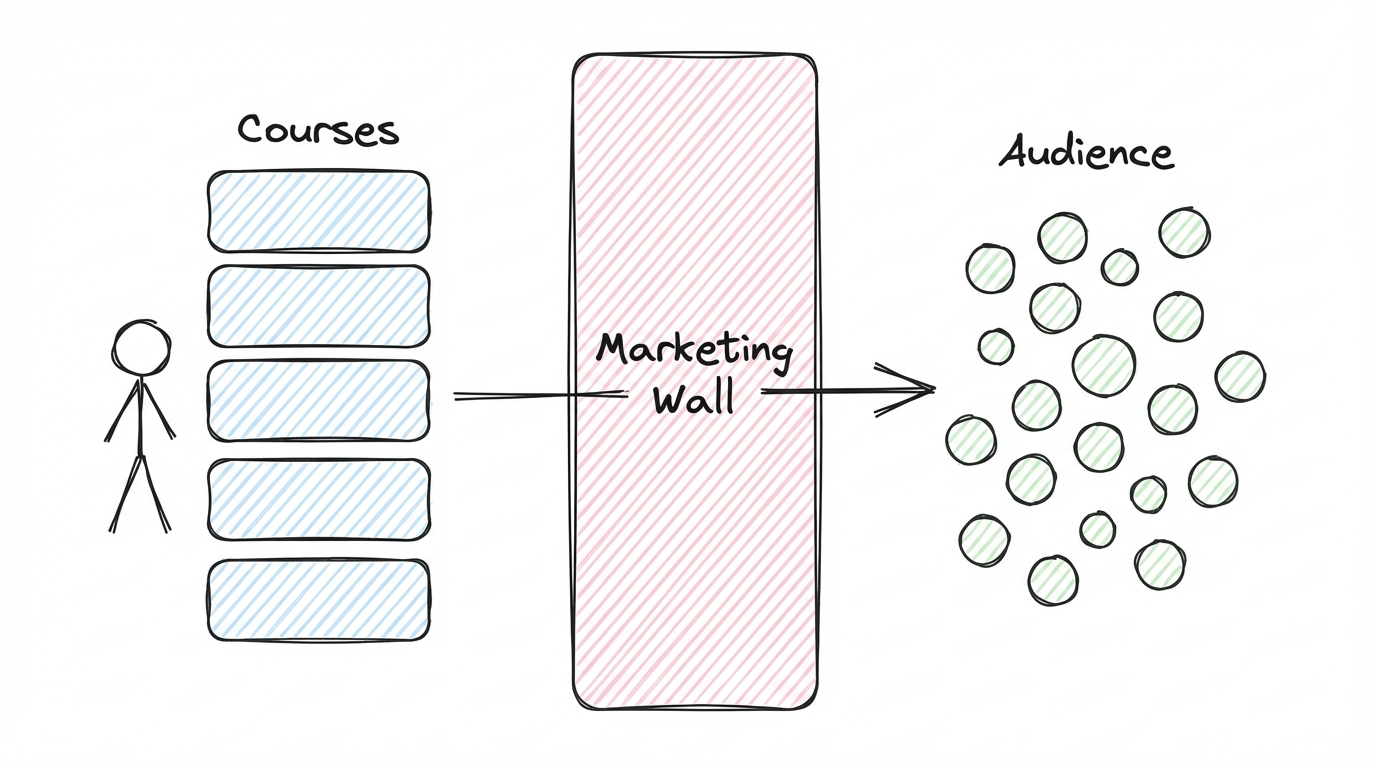

Creating and selling courses

This chapter isn't finished yet. Just some early learnings.

I went into 2025 with the idea to create three or four courses. Take a narrow topic, create something sophisticated, make it available. Stack them up. The more courses, the more it compounds into a nice passive income.

The idea is still valid. The problem was I got distracted.

I talked to some people, discovered others were interested in collaborating, and switched modes. Started creating courses in collaborations without recognizing the significant overhead that comes with it.

I learned there are two types of collaboration.

The first type: you both have some expertise or audience, you bring them together. I did one of these. The product was good enough to publish. But I can definitely do better. With this type, the sum of the parts is just the sum. Nothing more. Like those books where different authors write their perspective on a topic. Useful collection, but no magic.

The second type: you actually riff on each other's ideas. Extend them, bring them into different contexts. This can create something much better than the single parts. The collaboration I did with Barbara was like this. We expanded our knowledge because we did it together. The course we created was really good.

What paused the whole course thing was marketing.

My basic idea: create four or five assets, find a nice way to market them, generate around 100 sales a month. The more courses, the more it stacks up.

Turns out marketing for courses is much harder than I thought.

I took a course about it. The main insight was "if you have a massive audience, you can definitely sell courses." No shit. I do have a good audience, but it's a niche audience. That advice doesn't help.

I'm still experimenting. Currently reading "Simple Marketing for Smart People." What they describe matches my struggles pretty well. I'll try their approaches in 2026.

One good thing: because I've created a lot of this type of content now, I have an efficient production workflow. Especially when I do it on my own. I still have one collaboration course I want to bring out in 2026. I know that collaboration will work well, like the one with Barbara. And we already have a better angle for it.

More to figure out. But I still believe there's real value in courses. I buy them myself when they fit a topic I'm interested in. Marketing is just not easy. Even with a significant audience.

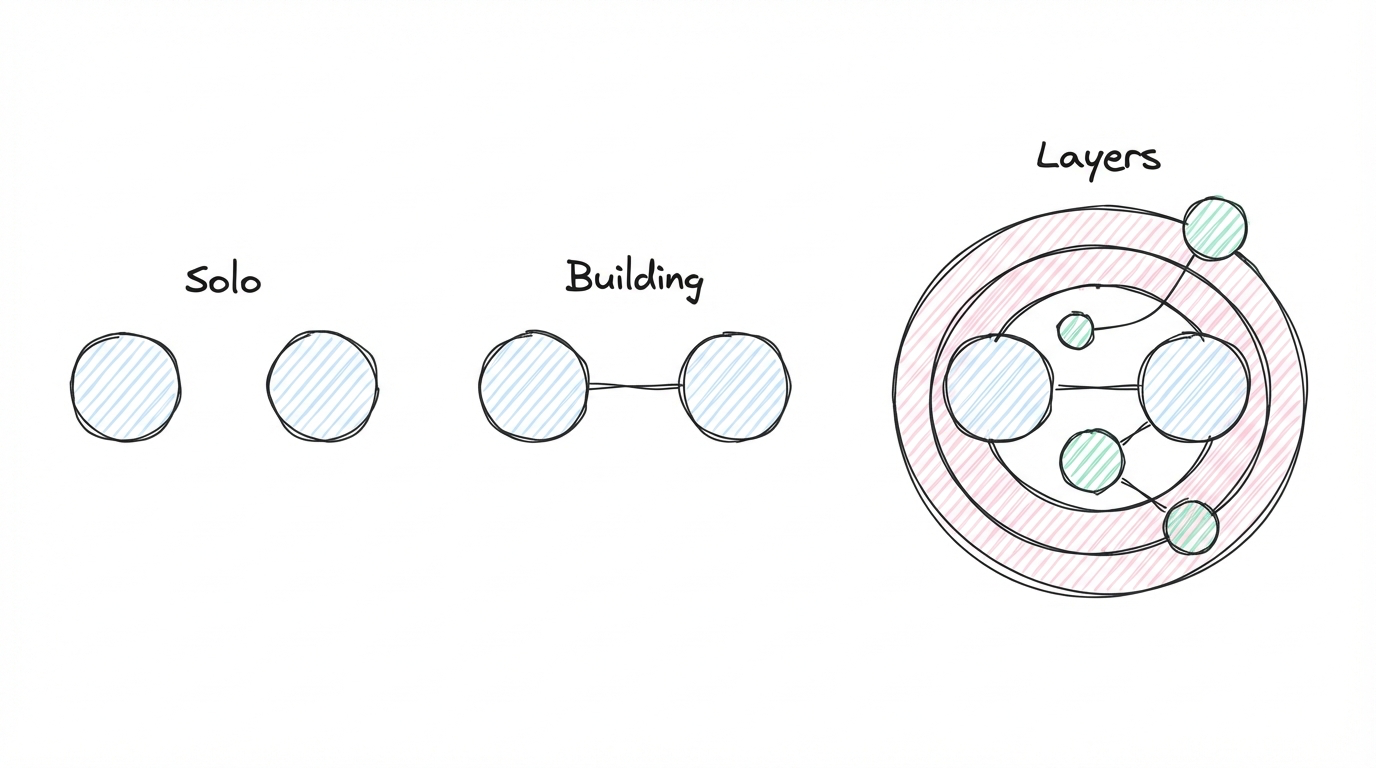

Working with others

Collaborations - finding what works

Maybe this doesn't apply to a lot of people, but it's essential for me.

I work alone. That's my default. One of the reasons I became self-employed was to test if I could do stuff on my own. It's not that I don't like working with others. But I definitely don't thrive in heavy team setups.

I tried different collaboration models throughout the years. Most didn't work out. The major mistake I always made: I wasn't good at picking the right people to work with. Not that these people were bad to work with. Our abilities just didn't match well. Same pattern when I had to hire in company roles. I could see I wasn't good at it.

So one thing I set for myself in 2025: be deliberate. Be careful. No jumping into things without being sure how they work out.

This approach worked a lot better.

I mostly did collaborations that were time-based. Always clear that we could say "this works" or "this doesn't work." I could adjust when things weren't going well.

One collaboration that worked really well was the one with Barbara. It started in 2025 and built up slowly.

We did a public course together. Did live workshops together. We have a weekly check-in where we discuss things we're working on. Often, these calls turn into general stuff where we exchange ideas about projects we're both struggling with.

You could see it adding up. Slowly but consistently. More and more layers.

At some point we said "maybe we want to do one or two projects together." Started doing them. Realized it actually works really well. We're very complementary in how we do things, but still have a lot of overlap in our views.

We'll do more in 2026. I'm looking forward to it. You can see some of it already here: https://fixmytracking.com/ - more of this to come.

After struggling to find the right model for years, here's my takeaway: working together with people can take time. That's okay. Don't rush into collaborations saying "oh my god, such huge potential." Even when it looks like huge potential from the outside.

The real potential shows when you actually work together. Let it develop.

I also need to mention four other collaborations here. With Ergest, I have a three-week cadence for our check-in calls. These calls always leave me with new ideas, refinements, and just plain happy.

Juliana and I had big plans for 2025: building a community together. Reality got the better of us, which is fine. I still enjoy every minute we find to chat about things. And we still have our course, which we will make happen in 2026.

And I am happy that Pete and I found more time together toward the end of the year. These calls are super deep and extremely nerdy, and similar fuel for new ideas (like building a product).

And finally, every exchange with Robert (I know that you are not such a call person) is a great exchange about anything, like agentic coding and data platforms.

And beyond that are the calls and chats with other people I had in 2025 - you all know who you are - thanks for these and your feedback and ideas.

Having a coach

This started in 2024 but really expanded in 2025. Working with Stefan: https://revolutioncoaching.de/home/en

One thought I have about this: I should have done this very early in my career. And then continuously. Always have one or two coaches.

I've been working with Stefan for over a year now. It helped me significantly to work on some fundamentals.

Everything positive I describe here (better focus, clearer priorities, knowing where I want to go) was, I think, only possible because of the work we invested before.

The interesting part is how differently it turned out from what I expected.

When I started looking for a coach/mentor at the end of 2024, I was looking for business advice. I thought my major weakness was my entrepreneurial mindset. I'm not the kind of person who is really good at going after the money. Making a lot of money. So I thought I needed someone to help with that.

It turned out completely differently.

We started the mentorship and almost immediately went in a different direction. We worked a lot more on fundamentals. How do I see myself? What kind of goals am I actually setting?

In the end, I learned that I'm actually not that bad at the entrepreneurial stuff. I just had a very unusual way of doing things. The mentorship helped me look better at things I had already achieved. And then from there, find the next step.

If I could recommend this to my former self: start two or three years into your career. Always have someone to check in with about where you're going.

I had informal versions of this before. Managers or other people within companies who acted like that to some degree. But it was never formalized. And especially when I became self-employed, that part was definitely missing.

I'm happy I added it. I'll definitely keep it. I have some people I can check in with on different topics now. Getting different perspectives. Having someone help you take a step back.

Personal operating system

Someone like me will never become a good focuser. It's not my personality. 2024 and 2025 were years of exploration. An idea came, I went deep on part of it, a new idea came across, I added it. Testing a lot of directions.

I have a stupid constraint: I have to actually do things to know if they work. I can't just look at something and predict how it plays out. I would love to be one of those people who can see something, visualize it, and already get a good idea of how it will develop. That doesn't work for me. I actually have to do the thing.

So I tested a subscription model for specific content. Learned what works well: the connection to people who subscribe. Learned what doesn't work well: the pressure to continuously deliver value. I expected it to be challenging. I needed to see how challenging. And if I could work around it or not.

The problem in 2025 was testing too many of these things. Creating too many isolated islands that don't play together.

You can test as long as everything moves in the same direction. But when I look at my content strategy, there's no strategy. I pushed out so many different types of content with no clear structure of where they should end up. I never really found that structure.

In the second half of 2025, I invested a lot more time in sessions with myself to think.

AI helps here. It's a good sounding board. You can throw things at it and see different angles. I created a specific setup that knows about my weaknesses and strengths.

Now I have the idea of what I want to achieve in the next few years. I'm getting better at breaking it down. Every new idea, I can run it against the path I have in mind. Does it actually fit? Or does it create too much overhead? Does it move me away from what I actually want to achieve?

That was really helpful.

For 2026, I reduced it to three monetary focus areas. I would prefer two. That's something I'll work on. Maybe I can get rid of one.

Everything else feeds into these three outcomes: sign up for the product I'm building, hire me and Barbara to build a better marketing data stack, or hire me for advisory work with your data team or product team to get a better product analytics setup.

At least I have clear outcomes now. I'll check along the year if these three should still be three. Two would be fine for me. I might end up with one. But one would mean not hedging bets. Giving up some freedom. I don't know if I want that.

Good to get clarity on this.

If you read this far, thanks for staying that long. I usually don't write personal posts, but from time to time, it helps to sort things out. And maybe one or two things are helpful for you as well. Let me know (via email).

Have a great 2026.